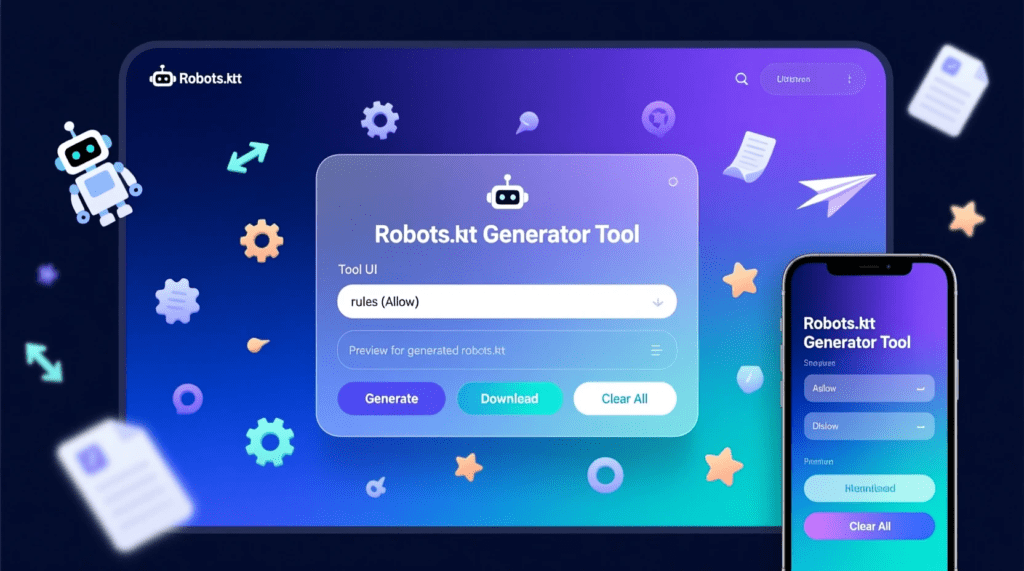

🤖 Robots.txt Generator

Create professional robots.txt files to control search engine crawling. Protect sensitive pages and optimize your crawl budget effectively.

What is Robots.txt?

The robots.txt file is a text document placed in your website’s root directory that instructs search engine crawlers which pages or sections they can and cannot access. This file follows the Robots Exclusion Protocol, a standard recognized by all major search engines. Using a robots.txt generator ensures your file follows proper syntax and conventions.

A robots.txt generator creates this file automatically, reducing the risk of syntax errors and keeping the output compatible with search engine guidelines. Every website should have a properly configured robots.txt file to manage crawl behavior effectively.

Why Use a Robots.txt Generator?

Writing a robots.txt file by hand requires understanding its syntax rules and their pitfalls. A robots.txt generator automates this process, preventing common mistakes that could accidentally block important pages or leave sensitive areas open to crawling.

Prevent Indexing Sensitive Content

Administrative panels, user account pages, and internal search results should not appear in search engines. A robots.txt generator helps you quickly keep crawlers out of these areas. Because robots.txt is publicly readable and only advisory, treat it as crawl control rather than a security measure.

Optimize Crawl Budget

Search engines allocate limited crawling resources to each website. By using a robots.txt generator to block unimportant pages like filter pages or duplicate content, you ensure crawlers focus on your most valuable pages, improving overall indexing efficiency.

Avoid Common Syntax Errors

A single typo in your robots.txt file can have serious consequences, either blocking your entire site or failing to keep crawlers out of sensitive pages. A reliable robots.txt generator reduces these risks by producing syntactically valid output every time.

How to Use the Robots.txt Generator

Our free robots.txt generator simplifies the creation process through an intuitive interface. Follow these steps to generate your robots.txt file:

Step 1: Add Your Sitemap URL

Enter your XML sitemap URL in the designated field. The robots.txt generator will include this reference, helping search engines discover your sitemap location easily. This improves crawl efficiency and indexing speed.

Step 2: Select Directories to Block

Check the boxes for common directories you want to block from crawling. The robots.txt generator includes popular options like admin panels, WordPress directories, and temporary folders. This protects sensitive areas with a single click.

Step 3: Add Custom Paths

If you need to block specific pages or sections not listed in the checkboxes, enter them in the custom paths field. The robots.txt generator accepts multiple paths separated by commas, making it easy to cover any area of your site.
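To illustrate how a comma-separated custom paths field could be turned into rules, here is a minimal Python sketch. The function name `custom_paths_to_rules` and the normalization choices (trimming spaces, adding a leading slash, dropping duplicates) are assumptions for illustration, not a description of how this tool works internally.

```python
def custom_paths_to_rules(raw: str) -> list[str]:
    """Turn a comma-separated string of paths into Disallow lines."""
    rules = []
    for part in raw.split(","):
        path = part.strip()
        if not path:
            continue  # skip empty entries such as a trailing comma
        if not path.startswith("/"):
            path = "/" + path  # robots.txt paths are relative to the site root
        rule = f"Disallow: {path}"
        if rule not in rules:  # keep the first occurrence, drop duplicates
            rules.append(rule)
    return rules


print(custom_paths_to_rules("/checkout/, cart ,/tmp/,"))
# ['Disallow: /checkout/', 'Disallow: /cart', 'Disallow: /tmp/']
```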

Step 4: Set Crawl Delay (Optional)

For high-traffic sites or shared hosting environments, setting a crawl delay can help prevent server overload. The robots.txt generator adds a Crawl-delay directive, asking crawlers to wait a set number of seconds between requests. Support varies by crawler: Bing honors it, while Googlebot ignores it.
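As a rough illustration of what a crawl delay looks like in the file and how a well-behaved crawler might read it, the sketch below uses Python's standard `urllib.robotparser` module. The sample file contents and the crawler name `ExampleBot` are made up for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file contents with a 10-second crawl delay for all crawlers.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A polite crawler would wait this many seconds between requests.
delay = parser.crawl_delay("ExampleBot")
print(delay)  # 10
```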

Step 5: Generate and Download

Click the generate button and the robots.txt generator creates your file instantly. Download it and upload it to your website’s root directory (the same location as your homepage) so that it is served at /robots.txt. Test it using Google Search Console’s robots.txt tester.
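The five steps above can be pictured as one small function. The following Python sketch is only an assumption about how such a generator might assemble its output; the name `build_robots_txt`, its parameters, and the example paths are hypothetical.

```python
def build_robots_txt(sitemap_url, blocked_paths, crawl_delay=None):
    """Assemble robots.txt text for all crawlers from the form inputs."""
    lines = ["User-agent: *"]
    if blocked_paths:
        lines += [f"Disallow: {path}" for path in blocked_paths]
    else:
        lines.append("Disallow:")  # empty Disallow means no restrictions
    if crawl_delay is not None:
        lines.append(f"Crawl-delay: {crawl_delay}")
    if sitemap_url:
        # The Sitemap line sits outside the User-agent group.
        lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


content = build_robots_txt(
    "https://example.com/sitemap.xml",
    ["/wp-admin/", "/tmp/"],
    crawl_delay=5,
)
print(content)
```

Saving `content` to a file named robots.txt and uploading it to the site root completes the process.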

Robots.txt Syntax and Rules

User-Agent Directive

The User-agent directive specifies which crawler the rules apply to. Using “*” targets all crawlers, while specific names like “Googlebot” target individual search engines. A robots.txt generator properly formats these directives, ensuring broad or targeted control as needed.
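To see how broad and targeted groups interact, this sketch checks a made-up file with Python's standard `urllib.robotparser`. The paths and the crawler name `OtherBot` are assumptions. Note that a crawler matching a specific group follows that group only, so Googlebot here is not bound by the `*` rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: one group for Googlebot, one for everyone else.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("Googlebot", "/drafts/post"))  # False
print(parser.can_fetch("Googlebot", "/admin/"))       # True (Googlebot group has no /admin/ rule)
print(parser.can_fetch("OtherBot", "/drafts/post"))   # True
print(parser.can_fetch("OtherBot", "/admin/"))        # False
```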

Disallow Directive

The Disallow directive tells crawlers which paths to avoid. Listing “/admin/” blocks the entire admin directory and all subdirectories. An empty Disallow directive (Disallow:) with no path means no restrictions. The robots.txt generator handles these rules correctly.
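A short Python sketch can confirm both behaviors: a directory rule covers its subdirectories, and an empty Disallow blocks nothing. It uses the standard `urllib.robotparser` module; the helper name `is_allowed` and the sample paths are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, path, agent="*"):
    """Return True if `agent` may fetch `path` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, path)


blocked = "User-agent: *\nDisallow: /admin/\n"
open_site = "User-agent: *\nDisallow:\n"

print(is_allowed(blocked, "/admin/users/edit"))    # False (subdirectory is covered)
print(is_allowed(blocked, "/blog/"))               # True
print(is_allowed(open_site, "/admin/users/edit"))  # True (empty Disallow blocks nothing)
```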

Allow Directive

The Allow directive creates exceptions to Disallow rules. For example, you might disallow an entire directory but allow specific files within it. While not all crawlers support Allow directives, major search engines do. A comprehensive robots.txt generator includes Allow support when needed.
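Here is a small Python sketch of an Allow exception, checked with the standard `urllib.robotparser`. The file names are hypothetical. One caveat worth stating: Python's parser applies the first matching rule, while Google documents using the most specific (longest) matching rule, so listing the Allow line before the Disallow line gives the same result under both approaches.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: block a directory but allow one file inside it.
ROBOTS_TXT = """\
User-agent: *
Allow: /downloads/catalog.pdf
Disallow: /downloads/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("*", "/downloads/catalog.pdf"))   # True
print(parser.can_fetch("*", "/downloads/internal.zip"))  # False
```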

Sitemap Directive

The Sitemap directive tells search engines where to find your XML sitemap. Unlike other directives, this is not crawler-specific and should appear outside User-agent blocks. Every robots.txt generator should include this important directive.
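As an illustration, the sketch below places the Sitemap line outside the User-agent block and reads it back with `site_maps()` from Python's standard `urllib.robotparser` (available in Python 3.8 and later). The sitemap URL is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file with the Sitemap line outside the User-agent group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.site_maps())  # ['https://example.com/sitemap.xml']
```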

Robots.txt Best Practices

Never Block Your Entire Site

Using “Disallow: /” under “User-agent: *” tells all compliant crawlers to stay away from your entire website, which can cause your pages to drop out of search results. This is rarely intentional but can happen through configuration mistakes. A good robots.txt generator warns against this catastrophic error.
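A warning like this could be implemented with a very simple check. The Python sketch below is an assumption about one way to do it, not this tool's actual logic; the name `blocks_entire_site` is hypothetical, and the check deliberately flags a bare “Disallow: /” in any group, including groups aimed at a single crawler.

```python
def blocks_entire_site(robots_txt: str) -> bool:
    """Return True if any line is a bare 'Disallow: /' rule."""
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "disallow" and value.strip() == "/":
            return True
    return False


print(blocks_entire_site("User-agent: *\nDisallow: /\n"))        # True
print(blocks_entire_site("User-agent: *\nDisallow: /admin/\n"))  # False
```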

Don’t Use Robots.txt for Privacy

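While a robots.txt generator can block pages from crawling, these pages can still be discovered through external links and appear in search results. For true privacy protection, use noindex meta tags or password protection instead of relying solely on robots.txt. Keep in mind that a crawler can only see a noindex tag on a page it is allowed to fetch, so do not also disallow that page in robots.txt.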

Case Sensitivity Matters

Path directives in robots.txt are case-sensitive. “/Admin/” and “/admin/” are different paths. A reliable robots.txt generator accounts for this, but always verify your directory names match exactly.
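The effect is easy to demonstrate with Python's standard `urllib.robotparser`. In this sketch the rule uses a lowercase path, so a request for the capitalized variant is not blocked. The paths are examples only.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse("User-agent: *\nDisallow: /admin/\n".splitlines())

print(parser.can_fetch("*", "/admin/"))  # False (matches the rule exactly)
print(parser.can_fetch("*", "/Admin/"))  # True (different case, rule does not apply)
```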

Test Before Deploying

After using a robots.txt generator, always test your file using Google Search Console’s robots.txt tester tool. This validates syntax and shows exactly which pages are blocked, helping you catch errors before they impact SEO.

Regular Maintenance

Review and update your robots.txt file regularly as your site evolves. When adding new sections or restructuring your site, regenerate your robots.txt using a robots.txt generator to ensure new content is properly managed.

Testing and Validating Robots.txt

Google Search Console Testing

Google Search Console offers a robots.txt tester that validates syntax and tests specific URLs against your rules. After creating a file with a robots.txt generator, use this tool to verify everything works as intended before deployment.

Common Validation Errors

Watch for syntax errors like missing colons, incorrect spacing, or typos in directives. These mistakes can render your entire robots.txt file ineffective. Using a robots.txt generator eliminates most syntax errors, but always validate the final output.
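For a quick pre-check before using a full validator, a few lines of Python can catch missing colons and misspelled directives. This is a minimal sketch under stated assumptions: the name `lint_robots_txt` is hypothetical, and the `KNOWN` set lists only the directives discussed on this page, so other valid directives would be reported as unknown.

```python
KNOWN = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots_txt(text):
    """Return (line_number, message) pairs for suspicious lines."""
    problems = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        key, sep, _ = line.partition(":")
        if not sep:
            problems.append((number, "missing colon"))
        elif key.strip().lower() not in KNOWN:
            problems.append((number, f"unknown directive '{key.strip()}'"))
    return problems


sample = "User-agent: *\nDisalow: /admin/\nDisallow /tmp/\n"
print(lint_robots_txt(sample))
# [(2, "unknown directive 'Disalow'"), (3, 'missing colon')]
```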

Testing Specific URLs

Test individual URLs to confirm they’re blocked or allowed as expected. Enter URLs into the tester provided by Google Search Console or similar tools. This catches logic errors that might not be obvious from reviewing the robots.txt generator output alone.
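The same idea can be scripted locally. The Python sketch below compares a list of URLs against the outcome you expect, using the standard `urllib.robotparser`; the helper `check_expectations`, the rules, and the URLs are all made up for the example. It surfaces a typical logic error: the rule “Disallow: /search” also blocks “/searching-tips”, because rules match by path prefix.

```python
from urllib.robotparser import RobotFileParser


def check_expectations(robots_txt, expectations, agent="*"):
    """Return the URLs whose allowed/blocked result differs from expectations."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        url
        for url, should_allow in expectations.items()
        if parser.can_fetch(agent, url) != should_allow
    ]


ROBOTS_TXT = "User-agent: *\nDisallow: /admin/\nDisallow: /search\n"

failures = check_expectations(
    ROBOTS_TXT,
    {
        "https://example.com/": True,
        "https://example.com/admin/login": False,
        "https://example.com/search?q=shoes": False,
        "https://example.com/searching-tips": True,  # expected to stay crawlable
    },
)
print(failures)  # ['https://example.com/searching-tips']
```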

Protect your website effectively with our free robots.txt generator. For more technical SEO guidance, explore Pinterest technical SEO tips.